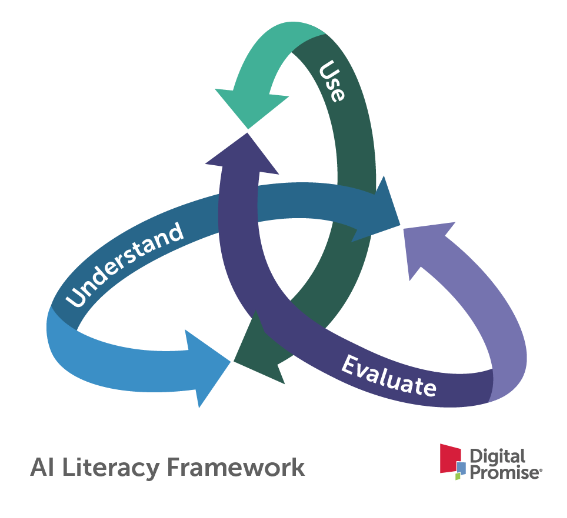

In recent years, resources have emerged to help educators understand what AI is and how to integrate it into teaching and learning (see resources below). AI literacy has emerged as a skill set that teachers and students need to safely use emerging technologies in teaching and learning. Nonprofit organizations, thought leaders, and school districts have begun to define AI literacy, and examples of their frameworks are listed at the end of this blog post. We build on these definitions to present a comprehensive framework for AI literacy that seeks to empower district leaders, teachers, and learners to make informed decisions about how to integrate AI in the world and specifically in education. The framework emphasizes that understanding and evaluating AI are critical to making informed decisions about whether and how to use AI in learning environments.

AI literacy includes the knowledge and skills that enable humans to critically understand, use, and evaluate AI systems and tools to safely and ethically participate in an increasingly digital world.

Our AI Literacy framework, pictured below, includes three components: Understand, Use, and Evaluate.

Figure 1. AI Literacy Framework includes three components: Understand, Evaluate, and Use

Understanding AI is an essential component of AI literacy: to make informed decisions about using and evaluating AI, users need a technical understanding of how artificial intelligence uses large datasets to develop associations and automate predictions.

| AI Evaluation Component | Description | Essential Question(s) |
|---|---|---|
| Transparency | Supporting users to understand what data and methods were used to train this AI system or tool. | What AI model and methods were used to develop this tool? What datasets were used to train this AI model? |
| Safety | Understanding data privacy, security and ownership. | How is information being collected, used, and shared? How do we prevent tools from collecting data and/or delete data that was collected? |
| Ethics | Considering how datasets, including their accessibility and representation, reproduce bias in our society. | How is AI perpetuating issues of access and equity? Who is harmed and benefitting, and how? |
| Impact | Examining the credibility of outputs as well as the efficacy of algorithms and questioning the biases inherent in the use of AI systems and tools. | Is this AI algorithm the right tool for impact? Is this AI output credible? How do we center human judgment in decision making? |

Table 1. Four components of Evaluating AI, a critical component of AI Literacy

Learn more about AI in K-12 Education

Emerging Frameworks for AI Literacy