AI is still in its early stages of being leveraged in education. The “AI-Powered Innovations in Mathematics Teaching & Learning” report aims to help those leading innovative equity work understand that they are not alone in addressing the challenges of using technology to go beyond the status quo and create learning opportunities that enable every student to thrive. The report explores how education leaders, product developers, and nonprofit and philanthropic organizations aspire to leverage AI to support their work in math teaching and learning. It is based on responses to a Request for Information issued in the spring of 2024 to understand the current and upcoming landscape of AI in education.

An analysis of the 178 responses found that many innovators are excited to leverage AI for student support (52%) and teacher support (39.3%). The use of AI has the potential to enable edtech tools to create personalized and adaptive learning opportunities unique to each individual learner by generating content in response to inputs, namely students’ interests. There was significant optimism that this approach could be harnessed explicitly to support students’ motivation and engagement.

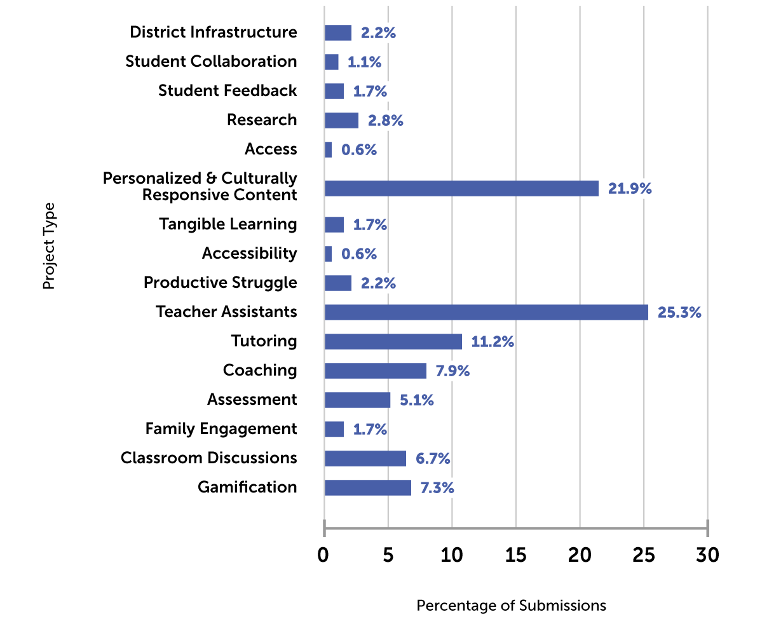

Figure 1. Percentage of submissions by project focus (N=178 submissions).

Similarly, many submissions intend to explore using AI to reduce teacher workload, enabling educators to spend more time building relationships with students and personalizing instruction. AI can be leveraged for real-time recommendations for instruction and to support teachers’ professional learning. The two most common project focus areas from these responses were teacher assistants (25.3%) and personalized and culturally responsive content (21.9%) (Figure 1). With the use of AI, our education system can provide a level of granularity, immediacy, and flexibility to meet each teacher and student where they are, which has not yet been possible in education.

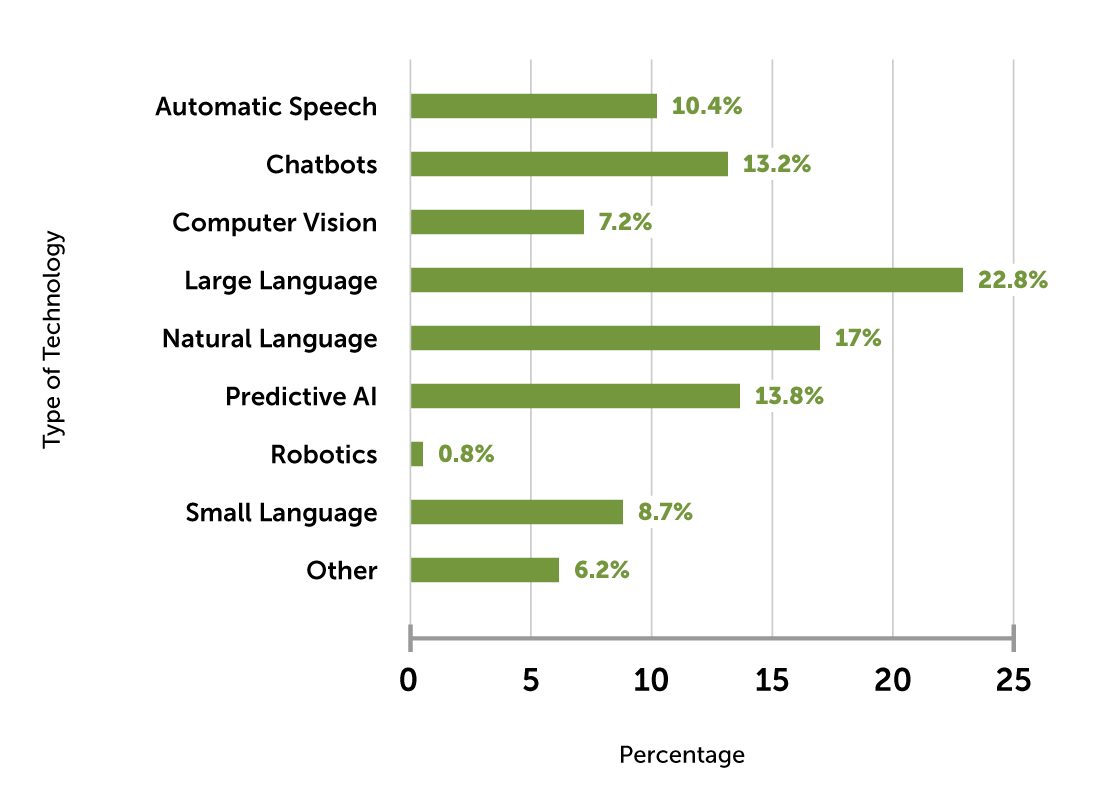

The report describes the various models respondents anticipate using in their efforts—most commonly, large language models, natural language processing, and predictive AI (Figure 2).

Figure 2. Percentage of the types of technology respondents anticipate using for their described projects (N=178 submissions).

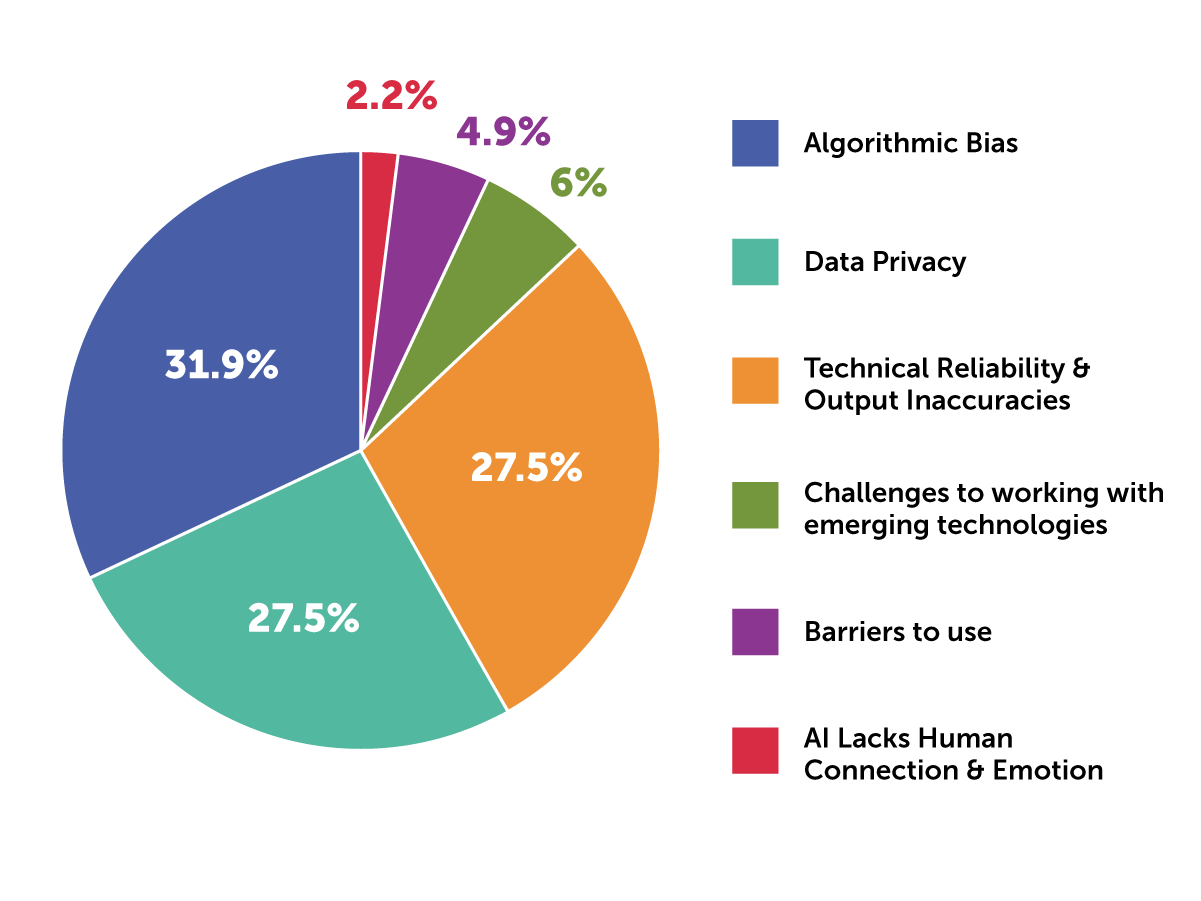

Moreover, the report focuses on the risks respondents anticipate and the mitigation strategies they identified to reduce the risks of working with AI. Algorithmic bias was the top risk shared across respondents, followed closely by challenges around data privacy and technical reliability (see Figure 3). Here, algorithmic bias refers to training data that is often unrepresentative of historically excluded communities, including communities of color and people with disabilities, which leads to inequitable outputs. This report advocates for a greater effort to share challenges and strategies around AI, and it models doing so by sharing specific mitigation strategies for each category of risk. Overall, human involvement, or human-in-the-loop, was central to mitigation strategies across the risk categories, as people will be integral to checking the quality, accuracy, and coherence of AI-generated outputs. Specifically, there was an emphasis on teachers themselves needing to be positioned as experts in their classrooms and centered in the iterative research and development processes for AI-enabled tools.

Figure 3. Percentage of the anticipated risks described in submission responses (N=178 submissions).

The distrust of AI held by many educators, caregivers, and even students, as well as concerns about losing the human element of education, could make it challenging to embrace the ways AI can respond to student needs. However, thoughtful and intentional ways exist to address these hurdles, as described in this report’s Risks and Mitigation Strategies section. At the core, transparency, AI literacy, and collaboration with end users from concept through implementation are key.

Leveraging AI-enabled technologies to drive equitable outcomes in education calls for education leaders to hold collaborative and inclusive conversations with their communities to determine how to position AI as a tool for achieving their shared vision. Driven by district priorities and subject matter experts, the field also needs to establish benchmarks to help recognize and evaluate the ethical design of AI for teaching and learning. AI developers should embrace and center the expertise of teachers and students by prioritizing co-design throughout a tool’s R&D processes. The future of AI also calls for innovations in research: the field needs rapid and responsive, yet rigorous and reliable, research methods that keep pace with the rate of change set by AI, so it can understand what works and what doesn’t. Each of us has a role to play in shaping the future of AI in education to ensure it leads to a more equitable education system.

Read the full report, “AI-Powered Innovations in Mathematics Teaching & Learning,” to explore these topics and more.